Rust Browser 7: Rendering

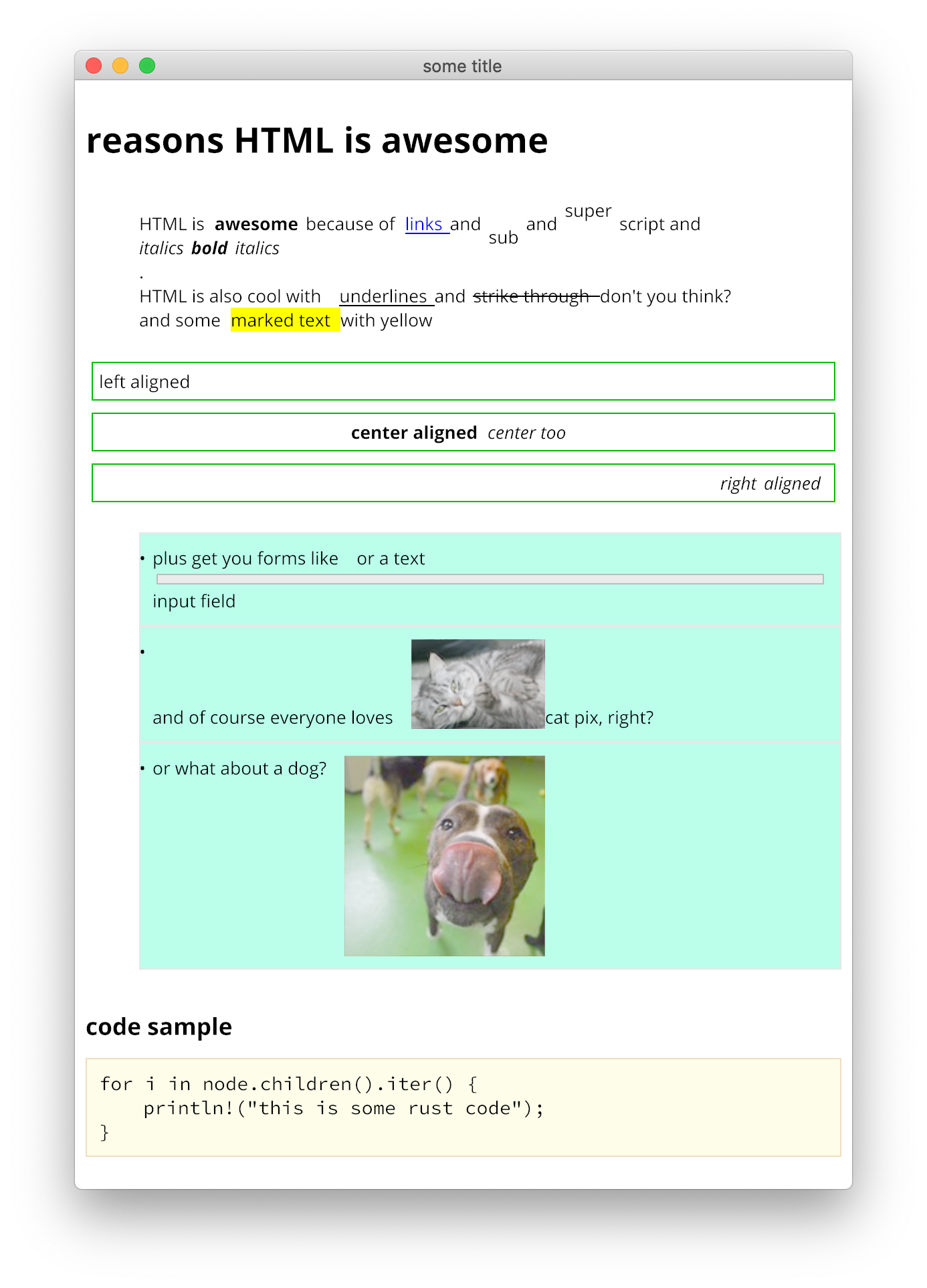

Rendering. The big payoff. This is where we actually get to see something drawn to the screen. This is where the mini-browser starts to feel real. It’s also where the code is straightforward and the hard part is picking the library.

Before we get into the library choices let’s discuss what rendering actually entails.

Layout to Rendering

Last time we covered layout, the process of turning style values and the document nodes into layout boxes, and then turning those into render boxes. Render boxes are literally just boxes. A tree of structures that contain pixel positioned rectangles of color and text. Drawing them is quite simple: just recurse the tree and draw them.

If we are doing offline rendering then we can traverse the render tree and draw into a buffer, then save it. If we are doing live rendering to the screen then we traverse the render tree and draw to some graphics surface. Again, quite simple. For every box in the tree we do the following:

- if it has a background color, draw the background rectangle.

- if it has a border, draw the border rectangle.

- if it has text, draw the text. No layout is required here. We’ve already split it up into line boxes and inline boxes. We’ve already determined the font size, family, weight, etc. Just draw the text and move on.
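The three steps above can be sketched as a single recursive function. This is a minimal illustration and not the browser's real code: the `RenderBox` fields, the `DrawCommand` enum, and the `paint` function are all hypothetical names, and the traversal records commands in a list rather than calling a real graphics API.

```rust
/// A pixel-positioned rectangle.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Rect {
    x: f32,
    y: f32,
    w: f32,
    h: f32,
}

/// Hypothetical render box: layout has already resolved everything.
struct RenderBox {
    rect: Rect,
    background: Option<u32>, // 0xRRGGBB, None means transparent
    border: Option<u32>,     // 0xRRGGBB, None means no border
    text: Option<String>,
    children: Vec<RenderBox>,
}

/// What the traversal asks the drawing backend to do (hypothetical).
#[derive(Debug, PartialEq)]
enum DrawCommand {
    FillRect(Rect, u32),
    StrokeRect(Rect, u32),
    Text(f32, f32, String),
}

/// Recurse the tree: background, then border, then text, then children.
fn paint(bx: &RenderBox, out: &mut Vec<DrawCommand>) {
    if let Some(color) = bx.background {
        out.push(DrawCommand::FillRect(bx.rect, color));
    }
    if let Some(color) = bx.border {
        out.push(DrawCommand::StrokeRect(bx.rect, color));
    }
    if let Some(text) = &bx.text {
        out.push(DrawCommand::Text(bx.rect.x, bx.rect.y, text.clone()));
    }
    for child in &bx.children {
        paint(child, out);
    }
}

/// A white page containing one bordered box with text in it.
fn sample_tree() -> RenderBox {
    let child = RenderBox {
        rect: Rect { x: 10.0, y: 10.0, w: 100.0, h: 20.0 },
        background: None,
        border: Some(0x000000),
        text: Some("Hello".to_string()),
        children: Vec::new(),
    };
    RenderBox {
        rect: Rect { x: 0.0, y: 0.0, w: 200.0, h: 100.0 },
        background: Some(0xFFFFFF),
        border: None,
        text: None,
        children: vec![child],
    }
}

fn main() {
    let mut commands = Vec::new();
    paint(&sample_tree(), &mut commands);
    for command in &commands {
        println!("{:?}", command);
    }
}
```

Recording commands in a list keeps the traversal independent of the graphics library, so either an offline or a live backend could consume the same output.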

So you can see that conceptually the render phase is very simple. And yet I had to rewrite this part several times. Why? Because of libraries.

How Rendering Really Happens Today

In the olden days of programming we would be given a drawing surface object with functions like drawLine, fillRect, and drawText on it. If it was even older then we’d just get a pointer to an empty bitmap and be told to fill in any pixel values we want. Today if we want to draw to a PNG image then we can still basically do the same thing. Drawing to the screen, however, is far more complex.
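To make the "empty bitmap" style concrete, here is a small sketch of a fillRect-like function that writes straight into a pixel buffer. The `Bitmap` type and its methods are made up for illustration, and the pixel format (one `u32` per pixel) is an assumption. Writing the result out as a PNG would need an image crate and is left out.

```rust
/// A bare bitmap: one u32 per pixel, stored row after row.
struct Bitmap {
    width: usize,
    height: usize,
    pixels: Vec<u32>,
}

impl Bitmap {
    /// Create a bitmap with every pixel set to 0.
    fn new(width: usize, height: usize) -> Self {
        Bitmap { width, height, pixels: vec![0; width * height] }
    }

    /// Fill a rectangle with one color, clipping it to the bitmap bounds.
    fn fill_rect(&mut self, x: usize, y: usize, w: usize, h: usize, color: u32) {
        let x_end = x.saturating_add(w).min(self.width);
        let y_end = y.saturating_add(h).min(self.height);
        // If the rectangle starts outside the bitmap these ranges are empty.
        for row in y..y_end {
            for col in x..x_end {
                self.pixels[row * self.width + col] = color;
            }
        }
    }

    /// Read back one pixel.
    fn get(&self, x: usize, y: usize) -> u32 {
        self.pixels[y * self.width + x]
    }
}

fn main() {
    let mut bmp = Bitmap::new(8, 4);
    bmp.fill_rect(2, 1, 3, 2, 0xFF00_00FF);
    // Print the bitmap as text: '#' for filled pixels, '.' for empty ones.
    for row in 0..bmp.height {
        let line: String = (0..bmp.width)
            .map(|col| if bmp.get(col, row) != 0 { '#' } else { '.' })
            .collect();
        println!("{}", line);
    }
}
```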

Today’s drawing environment has to deal with HiDPI, graphics acceleration, caching images, high resolution font drawing with hints, font metrics, and working with platform specific window APIs. In short it’s hard to draw something to the screen that works across platforms.

I could have chosen to support just one platform, like macOS, but even then we have to decide on OpenGL vs Metal, wrap native Cocoa/Objective-C APIs, and learn a whole lot of deep macOS-specific tech. I didn’t want to do that.

To avoid working with platform specific stuff we need an API which hides it all for us, so I started looking.

The State of Graphics in Rust Today

A look at lib.rs shows 428 crates in the graphics category. There are too many to choose from. Some are built on OpenGL, but others consider that old tech and are rebuilding on top of Vulkan (the successor to OpenGL), or on top of a layer on top of Vulkan, or on a layer which implements Vulkan on top of a platform specific Metal or DirectX API. And none of them are mature. There are no obvious choices. Read A Guide to Rust Graphics Libraries to see how much of a mess it is.

The second thing to consider is we need more than just drawing. We want to open a window, get mouse and keyboard events, and draw text and rectangles. With native APIs this is all handled in one place, but in the Rust world there are different libraries for each of these parts. But I don’t want to deal with all of that. I’m not writing a high performance game. I just want to draw a few things to the screen with as little fuss as possible. I want one-stop shopping.

ggez

After some research I started with ggez, a Rust library to create a Good Game Easily, according to the tagline. I was able to get it roughly working but eventually abandoned it because there weren’t any examples that showed how to lay out text. It really wants you to delegate text layout to the library. I couldn’t find any way to get text metrics, which is essential for a browser. So… on to the next library.

Raqote

A bit more research pointed me towards Raqote, a Rust 2D graphics library. It draws paths, images, has blend modes, and does text. It’s used by Servo for the canvas implementation backend. Sounds perfect.

A quick look at an example shows that it uses minifb for opening windows, font-kit for loading fonts, and does the actual drawing itself. Cool.

I ended up using Raqote for the first few weeks. Opening a window and drawing text and images was pretty easy. I could get metrics from the fonts using font-kit, and it even handled font resolution the way CSS specifies. A nice win!

Still, after a while I started noticing the limitations. First, minifb was slow. It appears to render everything into a giant bitmap which it then blits to the screen all at once.

Second, the font rendering looked horrible. There appeared to be no proper hinting and I couldn’t figure out how to scale it to use HiDPI rendering on my iMac, so the text always looked pixelated. Since a browser is 99% text, this wouldn’t do. Time to move on.

gfx_glyph

The text rendering library gfx_glyph seemed to be the gold standard for drawing text in Rust. According to the specs it handles text beautifully and will give me all the metrics I need. Furthermore it’s blazing fast thanks to a smart texture atlas for glyph caching. Sounds awesome. Of course I need a way to open windows and lots of people use glutin, so I started here.

This got me a long way towards better looking text, but I started to run into type issues, as I documented here. I could run code by copying from the examples, but the minute I tried to pass the graphics context as a parameter it became clear I didn’t actually know what the type of the object was. Looking at the type signature reveals a whole bunch of open generic types. These are all provided by various implementations. This is where I really started getting into the weeds.

A deep dive into graphics in Rust shows that there are many layers, with a lot of different backends abstracted over using generic types. This may be great for code reuse and portability, but I just want to draw some text on the screen. Why is it so hard?

I started by continuing to use the generic types, for example in the FontCache. The problem is that the FontCache is passed through a lot of functions. That meant every place I used it I had to start adding generic types. The generics started to infect my code. Take a look at this type signature for layout_block.

    fn layout_block<R: Resources, F: Factory<R>>(
        &mut self,
        containing_block: &mut Dimensions,
        font_cache: &mut FontCache<R, F>,
        doc: &Document,
    ) -> RenderBlockBox {

What is Resources? What is Factory? Who knows?

The final straw was realizing that I needed two implementations of FontCache, one for runtime and one for executing unit tests. My code started to become a mess of generic type signatures. Time to start looking again.

Glium and GlyphBrush

It turns out that gfx_glyph is one of three implementations. gfx_glyph is for use with APIs built on top of gfx-rs, a low-overhead, Vulkan-like GPU API for Rust. But there is also glyph_brush, a render-backend-agnostic API that just draws text. Then there is glyph_brush_layout, which implements line breaking and other layout functions. So clearly I need to use one of these for drawing the text. But what about the rest of my graphics stack? I don’t want to deal with Metal, Vulkan, DirectX etc. Those are all too new or too platform specific. So that means I need to work directly with OpenGL.

Looking at the Rust Graphics guide again I see there is a library called Glium, a safe wrapper around OpenGL. The library itself isn’t being developed by the original author anymore, but I don't really care. I don’t need the latest cutting edge features. OpenGL itself isn’t being developed anymore either, and I really just need to draw some rects and text, so old but stable sounds good to me. Furthermore, Glium comes with a mostly complete tutorial to get you started.

A bit of googling turned up a port of glyph_brush to glium called glium-glyph. The docs for glium show that it uses glutin for actually opening windows. Okay: so I’ve got three parts: glium, glutin, and glium-glyph. Time to make these all work together.

Unit tests

At the same time I was refactoring all of this graphics code I knew I would need the ability to run unit tests. More research showed that a common way to do this in Rust is to create an enum with two variants, one for app usage and one for unit test usage. Then add any functionality on the enum and make it delegate to the correct implementation at runtime. Any code which calls the enum functions won’t know or care what version it’s using. So I created an enum called Brush.

    pub enum Brush {
        // used by the running app: draws through glium
        Style1(glium_glyph::GlyphBrush<'static, 'static>),
        // used by unit tests: can measure text but cannot draw
        Style2(glium_glyph::glyph_brush::GlyphBrush<'static, Font<'static>>),
    }

    impl Brush {
        fn glyph_bounds(&mut self, sec: Section) -> Option<GBRect<f32>> {
            match self {
                Brush::Style1(b) => b.glyph_bounds(sec),
                Brush::Style2(b) => b.glyph_bounds(sec),
            }
        }

        pub fn queue(&mut self, sec: Section) {
            match self {
                Brush::Style1(b) => b.queue(sec),
                Brush::Style2(b) => b.queue(sec),
            }
        }

        pub fn draw_queued_with_transform(
            &mut self,
            mat: [[f32; 4]; 4],
            facade: &glium::Display,
            frame: &mut glium::Frame,
        ) {
            match self {
                Brush::Style1(b) => b.draw_queued_with_transform(mat, facade, frame),
                Brush::Style2(_b) => {
                    panic!("can't actually draw with style two")
                }
            }
        }
    }

You can see that each method on Brush matches on the variant and delegates to the correct implementation. For things that aren’t supported, like drawing to the screen in the unit test version, it just panics. All the type signatures are hidden from the rest of my code, so I don’t end up with the generics-infecting-my-code problem from before.
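The same enum-delegation pattern can be shown without any graphics crates. In this sketch every name (`ScreenBrush`, `HeadlessBrush`, `text_width`, `fits_on_line`) is hypothetical, and text is "measured" with a fixed width per character purely for illustration. One deliberate difference from the code above: the unsupported operation returns an `Err` rather than panicking, which is a swapped-in choice and not what the browser does.

```rust
/// Stand-in for a GPU-backed text brush (hypothetical).
struct ScreenBrush {
    char_width: f32,
    queued: Vec<String>,
}

/// Stand-in for a measuring-only brush used by unit tests (hypothetical).
struct HeadlessBrush {
    char_width: f32,
}

/// One concrete type for callers, two implementations behind it.
enum Brush {
    Screen(ScreenBrush),
    Headless(HeadlessBrush),
}

impl Brush {
    /// Both variants can measure text, so both arms delegate.
    fn text_width(&self, text: &str) -> f32 {
        let per_char = match self {
            Brush::Screen(b) => b.char_width,
            Brush::Headless(b) => b.char_width,
        };
        per_char * text.chars().count() as f32
    }

    /// Only the screen variant can queue text for drawing.
    fn queue(&mut self, text: &str) -> Result<(), String> {
        match self {
            Brush::Screen(b) => {
                b.queued.push(text.to_string());
                Ok(())
            }
            Brush::Headless(_) => Err("headless brush cannot draw".to_string()),
        }
    }
}

/// Layout-style code takes a plain `&Brush`: no generic parameters leak in.
fn fits_on_line(brush: &Brush, text: &str, line_width: f32) -> bool {
    brush.text_width(text) <= line_width
}

fn main() {
    let test_brush = Brush::Headless(HeadlessBrush { char_width: 10.0 });
    println!("fits: {}", fits_on_line(&test_brush, "hello", 60.0));

    let mut app_brush = Brush::Screen(ScreenBrush { char_width: 10.0, queued: Vec::new() });
    app_brush.queue("hello").unwrap();
    if let Brush::Screen(b) = &app_brush {
        println!("queued {} section(s)", b.queued.len());
    }
}
```

The cost of this approach is that every new method needs one match arm per variant, which stays manageable with only two variants.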

Let’s open a window

I now had three libraries with reasonable documentation. I started by opening a window.

    // make an event loop
    let event_loop = glutin::event_loop::EventLoop::new();
    // build the window
    let window = glutin::window::WindowBuilder::new()
        .with_title("some title")
        .with_inner_size(glutin::dpi::LogicalSize::new(WIDTH, HEIGHT));
    let context = glutin::ContextBuilder::new();
    let display = glium::Display::new(window, context, &event_loop).unwrap();

The font cache is initialized like this:

    // load a font
    let mut font_cache = FontCache {
        brush: Brush::Style1(GlyphBrush::new(&display, vec![])),
        families: Default::default(),
        fonts: Default::default(),
    };
    install_standard_fonts(&mut font_cache);

Notice that even though FontCache is using a particular brush implementation, creatively named Brush::Style1, the signature of FontCache is still just FontCache.

Now I can start an event loop which waits for events and draws the screen over and over. The code uses a lot of complex match patterns which are long, so I’ll just go over one in detail. You can look at the full file here.

The code snippet below watches for window events. If the event is keyboard input and the key code is for the Escape key, or if the window was asked to close, it sets the contents of control_flow to ControlFlow::Exit. Everything in glutin uses this type of event handling.

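    event_loop.run(move |event, _tgt, control_flow| {
        // sleep until the next event arrives
        *control_flow = ControlFlow::Wait;
        match event {
            Event::WindowEvent { event, .. } => match event {
                // Escape key or a close request: quit
                WindowEvent::KeyboardInput {
                    input:
                        KeyboardInput {
                            virtual_keycode: Some(VirtualKeyCode::Escape),
                            ..
                        },
                    ..
                }
                | WindowEvent::CloseRequested => *control_flow = ControlFlow::Exit,
                // the remaining window events are handled in the full file
                _ => (),
            },
            _ => (),
        }
    });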

Drawing Text

To actually draw the text I used glium-glyph, which draws everything with a single function, draw_queued_with_transform. The idea is that you traverse the render tree. For each bit of text you want to draw you create a Section object with the font, size, color, and text you want to draw. Then this is added to a queue in the brush. Once per frame the queue is drawn to the screen.

Underneath it caches text runs so if you draw the same text over and over (which is the common case in a browser) it will just reuse the same GPU resources. This makes drawing super fast. The with_transform part lets you pass in a matrix to translate or otherwise manipulate the drawn text. I use it to implement the window scrolling offset.
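As a sketch of what a scrolling transform could look like, the function below builds a 4x4 matrix that maps pixel coordinates to OpenGL clip space while shifting everything up by a scroll offset. Several things here are assumptions and not taken from the browser's code: the function names, the column-by-column storage (the layout the glium tutorial uses for matrix uniforms), and the idea that the matrix passed to draw_queued_with_transform replaces the default pixel-to-clip-space projection. Check the glium-glyph docs before relying on it.

```rust
/// 4x4 matrix stored as four columns (assumed layout, see the note above).
type Mat4 = [[f32; 4]; 4];

/// Map pixel coordinates (origin top-left, y down) to clip space
/// (-1..1, y up), with the content scrolled up by `scroll_y` pixels.
fn scroll_transform(width: f32, height: f32, scroll_y: f32) -> Mat4 {
    [
        [2.0 / width, 0.0, 0.0, 0.0],
        [0.0, -2.0 / height, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        // The last column is the translation part.
        [-1.0, 1.0 + 2.0 * scroll_y / height, 0.0, 1.0],
    ]
}

/// Multiply the matrix by the point (x, y, 0, 1) and return clip-space x, y.
fn apply(m: &Mat4, x: f32, y: f32) -> (f32, f32) {
    let v = [x, y, 0.0, 1.0];
    let mut out = [0.0f32; 4];
    for row in 0..4 {
        for col in 0..4 {
            out[row] += m[col][row] * v[col];
        }
    }
    (out[0], out[1])
}

fn main() {
    // An 800x600 window scrolled down by 100 pixels.
    let m = scroll_transform(800.0, 600.0, 100.0);
    // Content at y = 100 should now sit at the top edge of the window.
    let (x, y) = apply(&m, 0.0, 100.0);
    println!("top-left of visible area maps to ({}, {})", x, y);
}
```

With this convention, scrolling only changes one matrix entry per frame, and the queued text sections themselves never need to be rebuilt.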

Drawing Rectangles

To draw rectangles or other shapes we need to use glium directly. It essentially exposes a single function, draw, where you pass in a vertex buffer, the indexes into the buffer (if you are using any), the compiled shader program, your uniforms, and any extra draw parameters. Everything is stateless, which makes using OpenGL far nicer than the typical API.

For my purposes I need to draw a bunch of rects, so I created an empty vector of Vertex values called shapes. This is then passed through the tree traversal. Any time a box wants to draw a rectangle it adds new vertices to the vector. After the tree is processed this vector of vertices is turned into a proper buffer and drawn.
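Collecting rectangles into one vertex vector might look roughly like this. The `Vertex` layout and the `push_rect` helper are assumptions for illustration; in real glium code the struct would also need glium's `implement_vertex!` macro, and this sketch assumes the vertices are drawn as a plain triangle list with no index buffer.

```rust
/// One corner of a triangle: a position in pixels and an RGBA color.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Vertex {
    position: [f32; 2],
    color: [f32; 4],
}

/// Append one rectangle to `shapes` as two triangles (six vertices).
fn push_rect(shapes: &mut Vec<Vertex>, x: f32, y: f32, w: f32, h: f32, color: [f32; 4]) {
    let (left, top, right, bottom) = (x, y, x + w, y + h);
    let corners = [
        // first triangle
        [left, top],
        [right, top],
        [left, bottom],
        // second triangle
        [right, top],
        [right, bottom],
        [left, bottom],
    ];
    for position in corners.iter() {
        shapes.push(Vertex { position: *position, color });
    }
}

fn main() {
    let mut shapes: Vec<Vertex> = Vec::new();
    let red = [1.0, 0.0, 0.0, 1.0];
    let blue = [0.0, 0.0, 1.0, 1.0];
    // During the tree traversal each box would call push_rect as needed.
    push_rect(&mut shapes, 10.0, 20.0, 100.0, 50.0, red);
    push_rect(&mut shapes, 0.0, 0.0, 5.0, 5.0, blue);
    // After the traversal the whole vector becomes one buffer, one draw call.
    println!("{} vertices for 2 rectangles", shapes.len());
}
```

Because every rectangle carries its own color in its vertices, all of them can share one shader program and one draw call.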

For the images I do roughly the same thing, but passing a vector of ImageRect objects to the tree traversal. The rectangles can all be drawn as a single call, but since each image uses a different texture I have to make a call for every image. Still, it’s not a lot of overhead because modern OpenGL is so fast.

It looks like this:

    for image in images {
        let tex: &Texture2d = &image.texture;
        let image_uniforms = uniform! { matrix: box_trans, tex: tex };
        let img_vertex_buffer =
            glium::VertexBuffer::new(&display, &image.vertices).unwrap();
        // one draw call per image, since each image has its own texture
        target
            .draw(&img_vertex_buffer, &indices, &tex_program,
                  &image_uniforms, &Default::default())
            .unwrap();
    }

Conclusion

So after several iterations I finally have a way to draw pretty 2D text on the screen. It looks fantastic, even with custom fonts. The downside is I’m directly using OpenGL. If I didn’t already know the basics of OpenGL it would be a pain to work with. I see why libraries like ggez exist. There is definitely room in this world for someone to create a nice Rust 2D library, and in fact the ggez author has written a whole post about it.

However, for now, I’m happy with where I’ve landed. I can draw lightning fast text that looks great.

And that’s it. We’ve made a full browser end to end. Next time we’ll talk about where to go from here.