IdealOS Mark 3

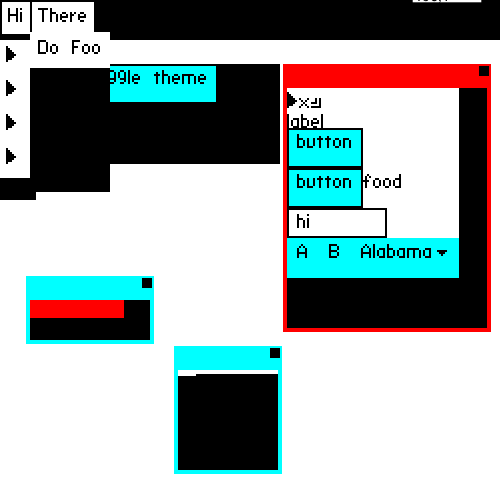

As I mentioned before I’ve gone back to working on the bottom half of Ideal OS. So far I’ve got a messaging protocol, a central server, a few tiny apps, and three different display server implementations. The version I’m calling Mark 3 looks like this:

It has a somewhat working menubar, a GUI toolkit with buttons, text input, and labels, a fractal generator, and a tiny dock to launch apps. More importantly, the window manager is fully abstracted from the apps. Apps can draw into a window surface through drawing commands, but they have no control over where the window is or whether it’s even visible on the screen. The display server (or its delegate) is entirely responsible for drawing to the real screen, positioning and dragging windows, and deciding who gets the input focus. Essentially, all apps are untrusted and must be prevented from interfering with the rest of the system.

This trust-nothing model is probably closer to a mobile OS than to traditional desktop ones, but I think it’s a better fit for the modern reality of apps that could come from anywhere. It also encourages pulling features out of the apps and into the trusted system. For example, with a built-in, system-wide database most apps will never need filesystem access at all; they can read and write safely to the database. Likewise, most apps don’t need raw keystrokes. They can accept action events from the system, events which might come from a real keyboard, a scripting system, or my son’s crazy Arduino creations. Hiding the source of those events from the apps makes them more flexible.
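
To make the action-event idea concrete, here is a minimal TypeScript sketch, assuming a hypothetical keybinding table held by the trusted system. The names (`RawKey`, `ActionEvent`, `translateKey`, `scriptAction`, and the `"save"`/`"undo"`/`"redo"` actions) are illustrative, not taken from IdealOS. The point is only that the app’s handler receives the same kind of event whether it came from a keyboard or a script.

```typescript
// Hypothetical sketch: the trusted system turns raw input into semantic
// actions, so apps never see raw keystrokes. All names are illustrative.
type RawKey = { key: string; ctrl: boolean; shift: boolean };
type ActionEvent = { type: "action"; action: string; source: string };

// Keybindings live in the trusted system, not in the app.
const keybindings = new Map<string, string>([
  ["ctrl+s", "save"],
  ["ctrl+z", "undo"],
  ["ctrl+shift+z", "redo"],
]);

// Normalize a raw key press into a combo string like "ctrl+shift+z".
function keyToCombo(k: RawKey): string {
  const parts: string[] = [];
  if (k.ctrl) parts.push("ctrl");
  if (k.shift) parts.push("shift");
  parts.push(k.key.toLowerCase());
  return parts.join("+");
}

// Keyboard source: returns an action, or null if the combo is unbound.
function translateKey(k: RawKey): ActionEvent | null {
  const action = keybindings.get(keyToCombo(k));
  return action !== undefined ? { type: "action", action, source: "keyboard" } : null;
}

// Any other source (a script, an Arduino gadget) emits the same event type.
function scriptAction(action: string): ActionEvent {
  return { type: "action", action, source: "script" };
}

// The app only handles actions; it never branches on where they came from.
function appHandle(ev: ActionEvent, log: string[]): void {
  log.push(ev.action);
}

const log: string[] = [];
const ev = translateKey({ key: "S", ctrl: true, shift: false });
if (ev) appHandle(ev, log);
appHandle(scriptAction("undo"), log);
// log is now ["save", "undo"]
```

Keeping the table on the system side means rebinding keys, or driving the app from a script, needs no change to the app at all.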

But enough about ideas. Let’s talk about what actually works.

Messages

Everything is built around typed messages (using code generated from a message schema) sent throughout the system over WebSockets. It’s horribly inefficient, but incredibly flexible, and it works across any language or platform. I’ll eventually add more efficient protocols, but I plan to always keep WebSockets as a fallback for maximum flexibility.
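
As a rough illustration, this is what schema-generated message code plus a routing server might look like in TypeScript. The message names and fields are assumptions, and a plain `send(text)` callback stands in for a real WebSocket connection so the sketch runs without any library. The router decodes and validates every frame before forwarding it, so a malformed message from an untrusted app never reaches the display server.

```typescript
// Hypothetical schema-generated message types (names and fields are assumptions).
type DrawRectMsg = {
  type: "draw-rect"; window: string; x: number; y: number; w: number; h: number; color: string;
};
type KeyDownMsg = { type: "keyboard-down"; window: string; key: string };
type OsMessage = DrawRectMsg | KeyDownMsg;

// Generated constructor: fills in the type tag so callers can't get it wrong.
function makeDrawRect(win: string, x: number, y: number, w: number, h: number, color: string): DrawRectMsg {
  return { type: "draw-rect", window: win, x, y, w, h, color };
}

// Generated decoder: validates untrusted text and copies only known fields.
function decode(text: string): OsMessage {
  const o = JSON.parse(text);
  const isNum = (v: unknown) => typeof v === "number";
  if (o?.type === "draw-rect" && typeof o.window === "string" &&
      isNum(o.x) && isNum(o.y) && isNum(o.w) && isNum(o.h) && typeof o.color === "string") {
    return { type: "draw-rect", window: o.window, x: o.x, y: o.y, w: o.w, h: o.h, color: o.color };
  }
  if (o?.type === "keyboard-down" && typeof o.window === "string" && typeof o.key === "string") {
    return { type: "keyboard-down", window: o.window, key: o.key };
  }
  throw new Error(`unknown or malformed message: ${text}`);
}

// Stand-in for a WebSocket: anything that can receive a text frame.
type Connection = { send(text: string): void };

// Central server: validates each frame, then forwards it to the named connection.
class Router {
  private conns = new Map<string, Connection>();
  register(name: string, conn: Connection): void {
    this.conns.set(name, conn);
  }
  route(target: string, text: string): void {
    const msg = decode(text); // malformed frames are rejected here, never forwarded
    const conn = this.conns.get(target);
    if (!conn) throw new Error(`no connection named ${target}`);
    conn.send(JSON.stringify(msg));
  }
}

const received: string[] = [];
const router = new Router();
router.register("display", { send: (t) => { received.push(t); } });
router.route("display", JSON.stringify(makeDrawRect("win-1", 0, 0, 10, 10, "red")));
```

Because the wire format is just JSON text, the same messages could be produced by a client in any language that has a WebSocket library.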

Keyboard

I’ve rewritten the keyboard events twice and will probably rewrite them a few more times. For now I’ve settled on something that resembles the most recent DOM keyboard event spec. It’s not ideal, but it works well enough for a simple text box.
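
For reference, the DOM `KeyboardEvent` exposes `key` (the character produced, or a named key such as `"Backspace"`), `code` (the physical key, such as `"KeyA"`), and the `shiftKey`/`ctrlKey`/`altKey`/`metaKey` modifier flags. Below is a sketch of a message with that shape driving a single-line text box; the `"keydown"` tag, the `TextBox` class, and the `keydown` helper are hypothetical.

```typescript
// A keyboard message shaped like a subset of the DOM KeyboardEvent.
// The field names follow the DOM; the "keydown" tag is an assumption.
interface KeyboardMessage {
  type: "keydown";
  key: string; // character produced, or a named key like "Backspace"
  code: string; // physical key, like "KeyA"
  shiftKey: boolean;
  ctrlKey: boolean;
  altKey: boolean;
  metaKey: boolean;
}

// Minimal single-line text box driven only by those messages.
class TextBox {
  text = "";
  cursor = 0;
  handle(e: KeyboardMessage): void {
    if (e.ctrlKey || e.metaKey) return; // leave shortcuts to the system
    switch (e.key) {
      case "Backspace":
        if (this.cursor > 0) {
          this.text = this.text.slice(0, this.cursor - 1) + this.text.slice(this.cursor);
          this.cursor--;
        }
        return;
      case "ArrowLeft":
        this.cursor = Math.max(0, this.cursor - 1);
        return;
      case "ArrowRight":
        this.cursor = Math.min(this.text.length, this.cursor + 1);
        return;
    }
    // Heuristic: printable keys carry a one-character `key`; named keys are longer.
    // (Characters outside the BMP, such as emoji, are not handled by this sketch.)
    if (e.key.length === 1) {
      this.text = this.text.slice(0, this.cursor) + e.key + this.text.slice(this.cursor);
      this.cursor++;
    }
  }
}

// Hypothetical helper for building unmodified key presses.
function keydown(key: string, code = ""): KeyboardMessage {
  return { type: "keydown", key, code, shiftKey: false, ctrlKey: false, altKey: false, metaKey: false };
}

const box = new TextBox();
for (const k of ["h", "i", "ArrowLeft", "x"]) box.handle(keydown(k));
// box.text is now "hxi", with the cursor just after the "x"
```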

Fonts

Fonts are a hard problem. They involve string parsing, graphics APIs, anti-aliasing, and the layout system. If there’s one thing that connects almost everything in the system together, it’s fonts: the whole stack has to be functional for fonts to work right. Since I don’t have a whole functional stack yet, I cheated. I drew some bitmap fonts by hand and stored them as JSON files in my own tiny pixel-based font format. This works well enough to lay out a single line of text at one font size and weight, i.e., enough to get the rest of the system running. Obviously fonts are something I’ll revisit once the graphics system improves.
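
The actual JSON format isn’t described here, so this sketch uses a hypothetical one: each glyph is a list of row strings where `#` marks an inked pixel. With fixed-size bitmap glyphs, laying out one line at one size reduces to a running sum of glyph widths plus spacing. Falling back to a `?` glyph for missing characters is also an assumption.

```typescript
// Hypothetical hand-drawn font format: "#" is an inked pixel, "." is blank.
interface Glyph { width: number; rows: string[] }
interface BitmapFont { height: number; spacing: number; glyphs: Record<string, Glyph> }

const fontJson = `{
  "height": 3,
  "spacing": 1,
  "glyphs": {
    "H": { "width": 3, "rows": ["#.#", "###", "#.#"] },
    "I": { "width": 1, "rows": ["#", "#", "#"] },
    "?": { "width": 2, "rows": ["##", ".#", "#."] }
  }
}`;
const font: BitmapFont = JSON.parse(fontJson);

// Single line, single size: x offsets are a running sum of glyph widths plus spacing.
function layoutLine(f: BitmapFont, text: string): { xs: number[]; width: number } {
  const xs: number[] = [];
  let x = 0;
  for (const ch of Array.from(text)) {
    const g = f.glyphs[ch] ?? f.glyphs["?"]; // fall back for undefined characters
    xs.push(x);
    x += g.width + f.spacing;
  }
  // No spacing after the last glyph.
  return { xs, width: xs.length > 0 ? x - f.spacing : 0 };
}

const line = layoutLine(font, "HI");
// line.xs is [0, 4] and line.width is 5
```

Anything beyond this (kerning, multiple sizes, wrapping) is exactly the part that needs the rest of the graphics stack.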

Graphics

Speaking of which, graphics! Right now all drawing is done with draw calls from apps to the display server, with x/y coordinates relative to the app’s own target window. Only draw rect, draw pixel, and draw image are supported, but that’s enough to get things working. The display server backs each window with a bitmap buffer for fast compositing, but it doesn’t have to work this way; each app could just draw directly to the screen if the display server wants to do it that way. The API is immediate mode, not retained. If the display server isn’t retaining buffers, it can just ask apps to redraw their whole windows. Not efficient, of course, but it works.
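
Here is a sketch of the buffer-backed approach, assuming a display server that keeps one off-screen pixel buffer per window. The three command shapes mirror draw pixel, draw rect, and draw image, but their field names (and the `PixelBuffer`/`composite` names) are guesses. Clipping every write to the window’s own bounds is what keeps an untrusted app from painting outside its window, and the compositing step is where the server, not the app, decides screen position.

```typescript
// Hypothetical command shapes mirroring draw pixel / draw rect / draw image.
type DrawCommand =
  | { type: "draw-pixel"; x: number; y: number; color: number }
  | { type: "draw-rect"; x: number; y: number; w: number; h: number; color: number }
  | { type: "draw-image"; x: number; y: number; w: number; h: number; pixels: number[] };

// One off-screen buffer per window (colors are packed 32-bit integers).
class PixelBuffer {
  readonly width: number;
  readonly height: number;
  readonly pixels: Uint32Array;
  constructor(width: number, height: number) {
    this.width = width;
    this.height = height;
    this.pixels = new Uint32Array(width * height);
  }
  // Every write is clipped, so an app can never touch pixels outside its window.
  set(x: number, y: number, color: number): void {
    if (x < 0 || y < 0 || x >= this.width || y >= this.height) return;
    this.pixels[y * this.width + x] = color;
  }
  get(x: number, y: number): number {
    return this.pixels[y * this.width + x];
  }
  // Immediate mode: each command is applied as soon as it arrives.
  apply(cmd: DrawCommand): void {
    switch (cmd.type) {
      case "draw-pixel":
        this.set(cmd.x, cmd.y, cmd.color);
        break;
      case "draw-rect":
        for (let j = 0; j < cmd.h; j++)
          for (let i = 0; i < cmd.w; i++) this.set(cmd.x + i, cmd.y + j, cmd.color);
        break;
      case "draw-image":
        for (let j = 0; j < cmd.h; j++)
          for (let i = 0; i < cmd.w; i++) this.set(cmd.x + i, cmd.y + j, cmd.pixels[j * cmd.w + i]);
        break;
    }
  }
}

// Compositing: the server decides where each window lands; later entries draw on top.
function composite(target: PixelBuffer, windows: { buf: PixelBuffer; x: number; y: number }[]): void {
  for (const { buf, x, y } of windows)
    for (let j = 0; j < buf.height; j++)
      for (let i = 0; i < buf.width; i++) target.set(x + i, y + j, buf.get(i, j));
}

const appWin = new PixelBuffer(4, 4);
appWin.apply({ type: "draw-rect", x: 1, y: 1, w: 10, h: 10, color: 7 }); // clipped to 4x4
appWin.apply({ type: "draw-pixel", x: 0, y: 0, color: 3 });
const screenBuf = new PixelBuffer(8, 8);
composite(screenBuf, [{ buf: appWin, x: 2, y: 2 }]);
```

A display server without per-window buffers could skip `PixelBuffer` and apply the same commands straight to the screen, asking apps to redraw whenever something changes.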

Text was originally drawn pixel by pixel, but now it’s sent as bitmaps over the wire. In the future, bitmaps could be stored as reusable resources, or even aggregated into GPU textures. I’m always trying to enable what will work now while not preventing future optimizations.
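
To see why sending bitmaps helps, this hypothetical sketch rasterizes a string with a tiny 3x3 bitmap font and compares the two encodings: one draw-pixel message per inked pixel versus a single draw-image message carrying the whole bitmap. The font, the message shapes, and the choice of `0` as the transparent color are all assumptions.

```typescript
// Hypothetical 3x3 bitmap font; "#" is an inked pixel.
const GLYPHS: Record<string, string[]> = {
  H: ["#.#", "###", "#.#"],
  I: ["###", ".#.", "###"],
};
const GLYPH_W = 3;
const GLYPH_H = 3;
const SPACING = 1;
const TRANSPARENT = 0; // assumption: color 0 means "no pixel"

type DrawPixelMsg = { type: "draw-pixel"; x: number; y: number; color: number };
type DrawImageMsg = { type: "draw-image"; x: number; y: number; w: number; h: number; pixels: number[] };

// Rasterize a whole string into one row-major pixel array.
function renderText(text: string, color: number): { w: number; h: number; pixels: number[] } {
  const w = Math.max(0, text.length * (GLYPH_W + SPACING) - SPACING);
  const pixels = new Array<number>(w * GLYPH_H).fill(TRANSPARENT);
  for (let n = 0; n < text.length; n++) {
    const rows = GLYPHS[text[n]] ?? []; // unknown characters render blank
    const left = n * (GLYPH_W + SPACING);
    rows.forEach((row, y) => {
      for (let x = 0; x < GLYPH_W; x++) if (row[x] === "#") pixels[y * w + left + x] = color;
    });
  }
  return { w, h: GLYPH_H, pixels };
}

// Old approach: one message per inked pixel.
function textAsPixels(text: string, x: number, y: number, color: number): DrawPixelMsg[] {
  const img = renderText(text, color);
  const out: DrawPixelMsg[] = [];
  for (let j = 0; j < img.h; j++)
    for (let i = 0; i < img.w; i++)
      if (img.pixels[j * img.w + i] !== TRANSPARENT) out.push({ type: "draw-pixel", x: x + i, y: y + j, color });
  return out;
}

// Current approach: a single message carrying the whole bitmap.
function textAsImage(text: string, x: number, y: number, color: number): DrawImageMsg {
  return { type: "draw-image", x, y, ...renderText(text, color) };
}

const perPixel = textAsPixels("HI", 10, 10, 0xffffff);
const asImage = textAsImage("HI", 10, 10, 0xffffff);
// perPixel holds 14 messages; asImage is 1 message with a 7x3 bitmap
```

The single-bitmap form is also what would make caching rendered text as a reusable resource straightforward later on.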

GUI toolkit

There are many ways to write a GUI toolkit with buttons and lists and scrolling windows and the like. I don’t have time to write a good one right now, so I’ve made the simplest thing that could possibly work, with no optimizations. Each window has a tree of components and containers. Updating has three phases.

- First, keyboard and mouse events arrive from the display server. The window sends each event to the input() method of the correct component in the tree. The component uses the input to update its internal state, then calls repaint on its parent. Eventually this bubbles up to the window.

- The window then recursively calls layout() on the component tree. Each child can set its own size, but its parent can override that size if it chooses. For example, a vertical box will size each of its children, then position them vertically. All components are laid out on every pass; there is no optimization or caching.

- Once the layout pass has completed, the window recursively calls draw(). This makes each component actually generate its draw calls, which are immediately sent to the display server. Currently the display server exhibits flickering because calls are sent continually. In the future these could be batched up or pruned for better performance and no flickering.
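
The three phases can be sketched in a few dozen lines of TypeScript. Only `input()`, `layout()`, `draw()`, and repaint come from the description above; `Button`, `VBox`, `AppWindow`, `hitTest`, and the `DrawCall` shape are hypothetical. Here the vertical box lets each child pick its own size, then overrides every child’s width to match the widest one, and the window reruns a full layout and draw whenever something has requested a repaint.

```typescript
// Hypothetical draw call; in a real system each would be sent to the display server.
type DrawCall = { x: number; y: number; w: number; h: number; color: string };

class Component {
  x = 0; y = 0; w = 0; h = 0;
  parent: Component | null = null;
  kids(): Component[] { return []; }
  // Phase 1: react to input, then request a repaint that bubbles up the tree.
  input(_ev: string): void {}
  repaint(): void { this.parent?.repaint(); }
  // Phase 2: choose a size (the parent assigns x/y and may override w/h).
  layout(): void {}
  // Phase 3: emit draw calls in window coordinates.
  draw(_out: DrawCall[]): void {}
}

class Button extends Component {
  label: string;
  pressed = false;
  constructor(label: string) { super(); this.label = label; }
  input(ev: string): void {
    if (ev === "click") { this.pressed = !this.pressed; this.repaint(); }
  }
  layout(): void { this.w = 8 * this.label.length + 10; this.h = 20; }
  draw(out: DrawCall[]): void {
    out.push({ x: this.x, y: this.y, w: this.w, h: this.h, color: this.pressed ? "dark" : "light" });
  }
}

class VBox extends Component {
  children: Component[] = [];
  kids(): Component[] { return this.children; }
  add(c: Component): this { c.parent = this; this.children.push(c); return this; }
  layout(): void {
    let y = this.y;
    let maxW = 0;
    for (const c of this.children) {
      c.x = this.x; c.y = y; // the parent positions each child...
      c.layout();            // ...and the child picks its own size
      y += c.h;
      maxW = Math.max(maxW, c.w);
    }
    for (const c of this.children) c.w = maxW; // parent overrides: stretch to widest
    this.w = maxW; this.h = y - this.y;
  }
  draw(out: DrawCall[]): void { for (const c of this.children) c.draw(out); }
}

// Find the deepest component under a point.
function hitTest(c: Component, x: number, y: number): Component | null {
  if (x < c.x || y < c.y || x >= c.x + c.w || y >= c.y + c.h) return null;
  for (const k of c.kids()) {
    const hit = hitTest(k, x, y);
    if (hit) return hit;
  }
  return c;
}

class AppWindow extends Component {
  root: Component;
  dirty = true;
  frames: DrawCall[][] = []; // each entry stands in for one batch sent to the display server
  constructor(root: Component) { super(); this.root = root; root.parent = this; }
  repaint(): void { this.dirty = true; } // the repaint bubble stops at the window
  click(x: number, y: number): void {
    hitTest(this.root, x, y)?.input("click"); // phase 1
    this.update();
  }
  update(): void {
    if (!this.dirty) return;
    this.root.layout(); // phase 2: every component, every pass, no caching
    const out: DrawCall[] = [];
    this.root.draw(out); // phase 3
    this.frames.push(out);
    this.dirty = false;
  }
}

const okBtn = new Button("OK");
const cancelBtn = new Button("Cancel");
const guiWin = new AppWindow(new VBox().add(okBtn).add(cancelBtn));
guiWin.update(); // initial layout and draw
guiWin.click(5, 25); // lands on the second button
```

Because nothing is cached, every click that changes state produces a full new batch of draw calls; batching or pruning would slot in inside `update()`.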

Windows

The windows themselves are trickier than GUI components. A component only exists within the app and is used to generate draw calls. The window structure, on the other hand, has to exist in the app, in the display server, and in the main routing server. Furthermore, all three copies must be kept in sync without letting the app violate any of its security restrictions. This is proving tricky.

My current strategy is that the main server is the single source of truth. It keeps a list of all apps, their windows, and their child windows. Deciding how to size and position windows is left up to the display server, since it has a better idea of what makes a good layout. However, the state is still kept in the main server in case the display server crashes or there are multiple outputs. Finally, events for window changes are sent to the app, but the app has no control over the window’s position or size. It can request certain values, but it ultimately takes what the rest of the system decides. This is especially important for things like popup windows, where choosing a position really requires knowing what else is on the screen, which of course is invisible to an untrusted app.
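
A minimal sketch of the single-source-of-truth idea, in TypeScript. The `CentralServer` class, the `CascadePolicy` placement rule, the `"display-server"` caller check, and the `window-changed` event shape are all assumptions made for illustration. The app only ever requests a size; the policy (standing in for the display server) decides the real geometry, and the app’s change events deliberately omit screen position.

```typescript
// Hypothetical window record kept by the central server (the source of truth).
interface WinState { id: string; owner: string; x: number; y: number; w: number; h: number; parent?: string }
interface SizeRequest { w: number; h: number }
type Geometry = { x: number; y: number; w: number; h: number };
type WindowChanged = { type: "window-changed"; id: string; w: number; h: number };

// Placement policy standing in for the display server: clamp to the screen, cascade new windows.
class CascadePolicy {
  screenW: number;
  screenH: number;
  constructor(screenW: number, screenH: number) { this.screenW = screenW; this.screenH = screenH; }
  place(req: SizeRequest, existing: WinState[]): Geometry {
    const w = Math.min(req.w, this.screenW);
    const h = Math.min(req.h, this.screenH);
    const offset = 20 * existing.length;
    return { x: Math.min(offset, this.screenW - w), y: Math.min(offset, this.screenH - h), w, h };
  }
}

class CentralServer {
  private windows = new Map<string, WinState>();
  private nextId = 1;
  private policy: CascadePolicy;
  private notify: (app: string, ev: WindowChanged) => void;
  constructor(policy: CascadePolicy, notify: (app: string, ev: WindowChanged) => void) {
    this.policy = policy;
    this.notify = notify;
  }
  // Apps may only request a size; the policy decides the real geometry.
  openWindow(app: string, req: SizeRequest, parent?: string): string {
    if (parent !== undefined && this.windows.get(parent)?.owner !== app)
      throw new Error("apps may only attach child windows to windows they own");
    const id = `win-${this.nextId++}`;
    const geo = this.policy.place(req, Array.from(this.windows.values()));
    this.windows.set(id, { id, owner: app, parent, ...geo });
    // The app learns its size, but never its position on screen.
    this.notify(app, { type: "window-changed", id, w: geo.w, h: geo.h });
    return id;
  }
  // Moving is reserved for the display server. A real system would authenticate
  // the connection rather than trust a caller string.
  moveWindow(caller: string, id: string, x: number, y: number): void {
    if (caller !== "display-server") throw new Error("only the display server may move windows");
    const win = this.windows.get(id);
    if (!win) throw new Error(`no window ${id}`);
    win.x = x;
    win.y = y;
  }
  // Full copy of the state, e.g. for a display server that just restarted.
  snapshot(): WinState[] {
    return Array.from(this.windows.values(), (s) => ({ ...s }));
  }
}

const events: WindowChanged[] = [];
const srv = new CentralServer(new CascadePolicy(800, 600), (_app, ev) => { events.push(ev); });
const mainWin = srv.openWindow("paint", { w: 5000, h: 300 }); // too wide: clamped to 800
const popup = srv.openWindow("paint", { w: 100, h: 100 }, mainWin);
```

Since the state never lives only in the display server, a restarted (or second) display server could rebuild its view from `snapshot()`.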

I hope this window abstraction will enable new window features, like splitting windows across screens or embedding them securely inside other apps. What if the widgets in a sidebar were just tiny windows like any other? No special APIs needed!

What’s next?

The general philosophy here is to use as few abstractions as possible with maximum flexibility. It’s the only way I’ll be able to build a whole system by myself in less than a few lifetimes. I’m not happy with the current custom schema system for messages; it’s very clunky and hard to maintain. I plan to switch to JSON Schema or something similar, since it will let me keep the messages strongly typed and also let all system resources be easily editable JSON files instead of custom formats.

Once schemas are integrated, I’m going to move all apps, themes, keybindings, and translations into JSON files that can be easily loaded and searched with a real query language instead of so much custom code.

Onward and upward!

Posted May 27th, 2021

Tagged: idealos